Using a database index for report performance

3 Tasks

1 hr 30 mins

Scenario

Front Stage wants to investigate the impact of expanding its address search capability to include filtering on street addresses that start with a certain number. They are aware of a source of actual address information that they can use: OpenAddress. Front Stage is concerned that, as the Address table grows larger, and with greater use of address search, query performance could impact their ability to efficiently process cases.

The following table provides the credentials you need to complete the challenge.

| Role | User name | Password | User type |

|---|---|---|---|

| Admin | Admin@Booking | rules | Application |

| pgAdmin4 | pega | pega | Database |

Note: This challenge can only be completed via Linux Lite VM as need to have database access.

Analyze the possible performance impact of expanding the address search capability to include filtering on street addresses. To do this, you first insert a large amount of test data into the FSG-Data-Address table, customerdata.fsg_data_address.

Next, you test a street filter condition to use with the Haversine formula. You do not need to modify the HaversineFormula Connect-SQL rule. Instead, execute the modified query from the PostSQL pdAdmin4 tool. Analyze the query and prefix the query by using EXPLAIN (ANALYZE true, COSTS true, FORMAT JSON). You first execute the query and note the result. Then, you add a database index to the street column. Execute the query again and compare the result from your first run.

Detailed Tasks

1 Review solution details

Consider indexing a table column that is used as a filter criterion in the SQL query.

2 Configure indexing

To complete the assignment, accomplish the following tasks.

- Download the address data from the following link: Excercise.zip (Openaddr Collected US Northeast File).

- Transfer the .zip file to the

$/home/architect/Desktopdirectory. - Load the .csv address data into the PostgreSQL database by using the query tool of pgAdmin4.

- In the Exercise file, open .sql file fsg_data_address_import.sql and execute.

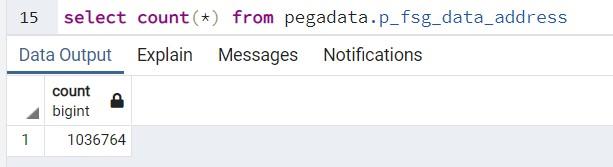

- Execute the following query to verify that all the records are loaded successfully.

Select count(*) from customerdata.fsg_data_address - Instead of modifying the Haversine formula by adding a filter criterion such as AND street LIKE '1 ROGERS STR%', perform a simple query within pgAdmin4 against the address table to analyze statistics. The following table is an example.

EXPLAIN (ANALYZE true, COSTS true, FORMAT JSON)

SELECT pyguid AS pyGUID,

reference AS Reference,

isfor AS IsFor,

street AS Street,

city AS City,

state AS State,

postalcode AS PostalCode,

country AS Country,

latitude AS Latitude,

longitude AS Longitude

FROM customerdata.fsg_data_address

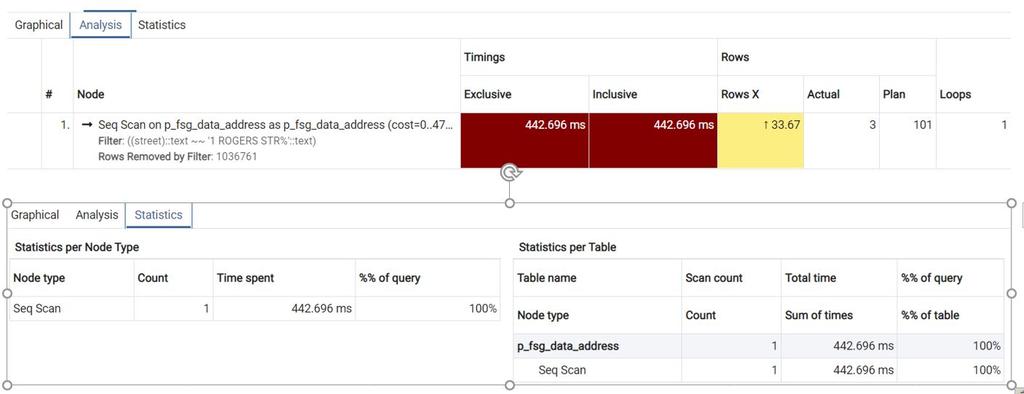

WHERE street like '1 ROGERS STR%'; - Verify and capture the statistics for the sequential scan.

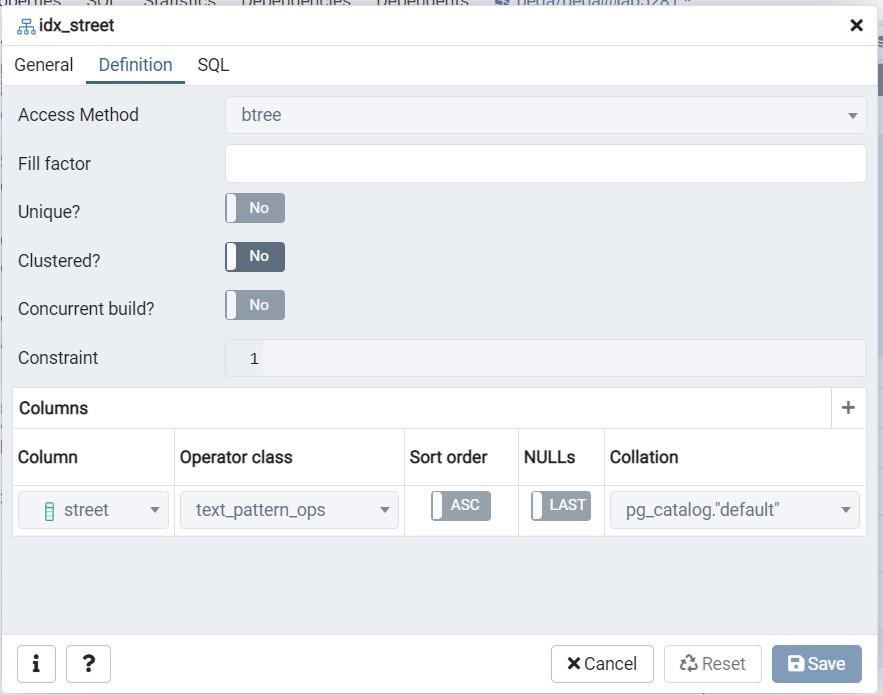

- Under pr_fsg_data_address, select Indexes.

- Right-click, and then select Create.

- Provide an index name such as idx_street or StreetIDX.

- In the Definition table, select btree.

- Add the street column to the index.

- Choose any operator class, such as text_pattern_ops.

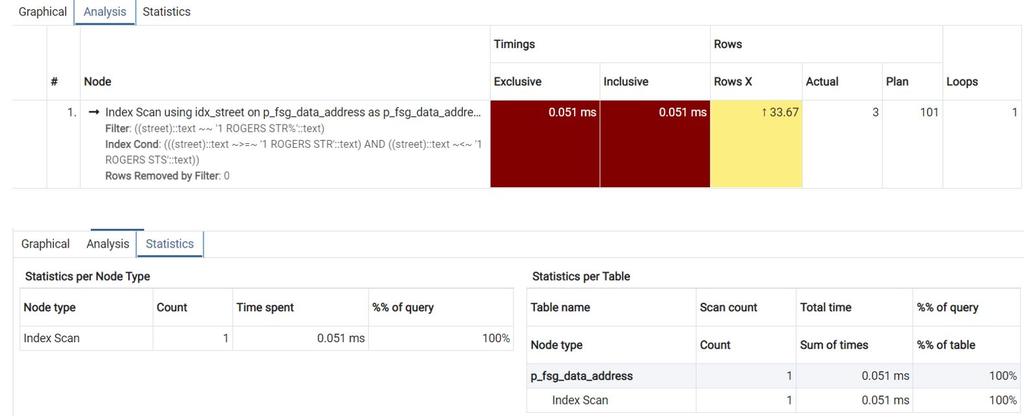

- Repeat step 6 to see significantly faster performance due to an Index Scan being used.

- Optional: Execute the following modified version of the Haversine formula query.

EXPLAIN (ANALYZE true, COSTS true, FORMAT json)

SELECT pyGUID AS pyGUID,

Reference AS Reference,

IsFor AS IsFor,

Street AS Street,

City AS City,

State AS State,

PostalCode AS PostalCode,

Country AS Country,

Latitude AS Latitude,

Longitude AS Longitude,

Distance AS Distance

FROM (

SELECT z.pyguid AS pyGUID,

z.reference AS Reference,

z.isfor AS IsFor,

z.street AS Street,

z.city AS City,

z.state AS State,

z.postalcode AS PostalCode,

z.country AS Country,

z.latitude AS Latitude,

z.longitude AS Longitude,

p.radius,

p.distanceunit * DEGREES(ACOS(COS(RADIANS(p.latpoint)) * COS(RADIANS(z.latitude)) * COS(RADIANS(p.longpoint - z.longitude)) + SIN(RADIANS(p.latpoint)) * SIN(RADIANS(z.latitude)))) AS Distance

FROM customerdata.fsg_data_address AS z

JOIN (

SELECT 42.0 AS latpoint,

-71.0 AS longpoint,

30.0 AS radius,

69.0 AS distanceunit ) AS p ON 1 = 1 ) AS d

WHERE distance <= radius

AND street LIKE '1 ROGERS STR%'

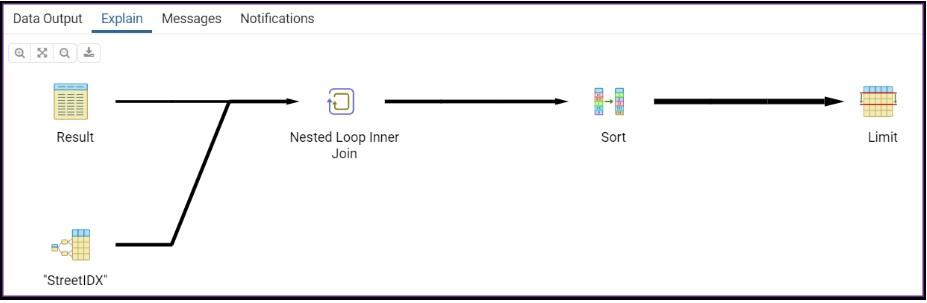

ORDER BY distanceThe following image depicts the explain statement execution of the modified version of the Haversine formula.

Note: Attempting to index the latitude and longitude columns does not improve performance because these columns are numeric. pgAdmin4 does not support the definition of indexes for numeric columns. Forcing an index on a numeric column using DDL syntax, for example, CREATE INDEX idx_lat ON customerdata.fsg_data_address(latitude), does not work; the index is ignored. - Verify that indexed columns result in significantly improved performance readings.

3 Optional: Compare performance of report definition with different sources

To complete the assignment, accomplish the following tasks.

- Load the large amount of data into the system.

- Execute the below query plan to view the statistics/analysis.

Query Plan Query Plan Output EXPLAIN (ANALYZE true, COSTS true, FORMAT json)

SELECT pyguid AS pyGUID,

reference AS Reference,

isfor AS IsFor,

street AS Street,

city AS City,

state AS State,

postalcode AS PostalCode,

country AS Country,

latitude AS Latitude,

longitude AS Longitude

FROM pegadata.p_fsg_data_address

WHERE street like '1 ROGERS STR%';

[

{

"Plan": {

"Node Type": "Seq Scan",

"Relation Name": "p_fsg_data_address",

"Alias": "p_fsg_data_address",

"Startup Cost": 0,

"Total Cost": 47526.55,

"Plan Rows": 101,

"Plan Width": 139,

"Actual Startup Time": 128.282,

"Actual Total Time": 417.202,

"Actual Rows": 3,

"Actual Loops": 1,

"Filter": "((street)::text ~~ '1 ROGERS STR%'::text)",

"Rows Removed by Filter": 1036761

},

"Planning Time": 0.241,

"Triggers": [],

"Execution Time": 417.292

}

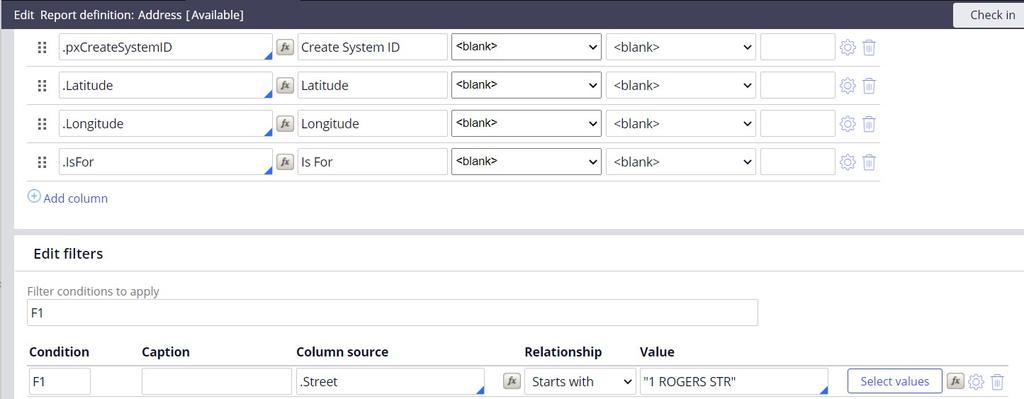

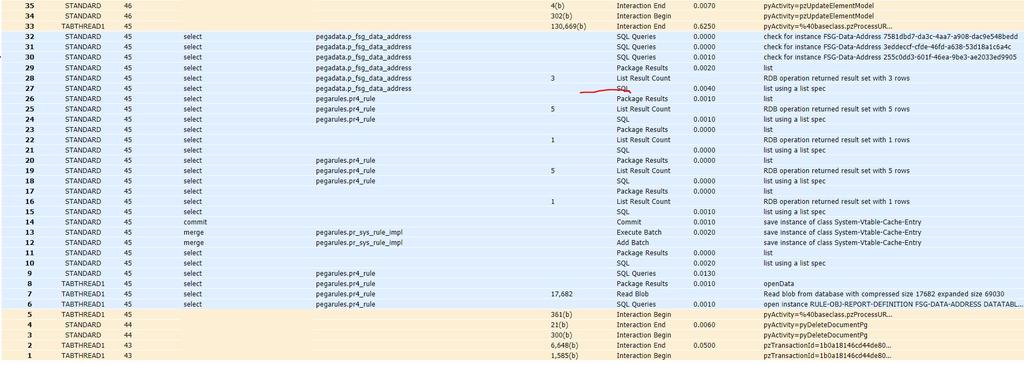

] - Run the report definition with the same filter criteria from the query plan.

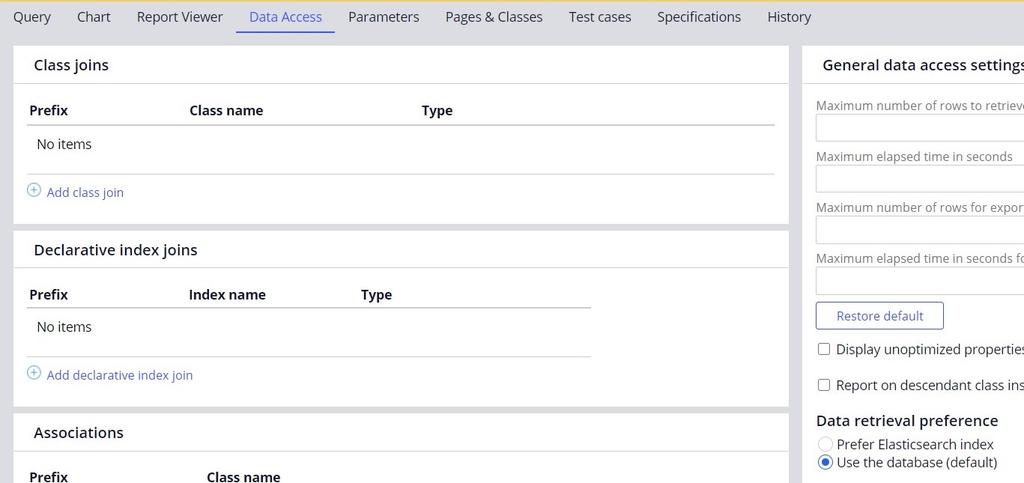

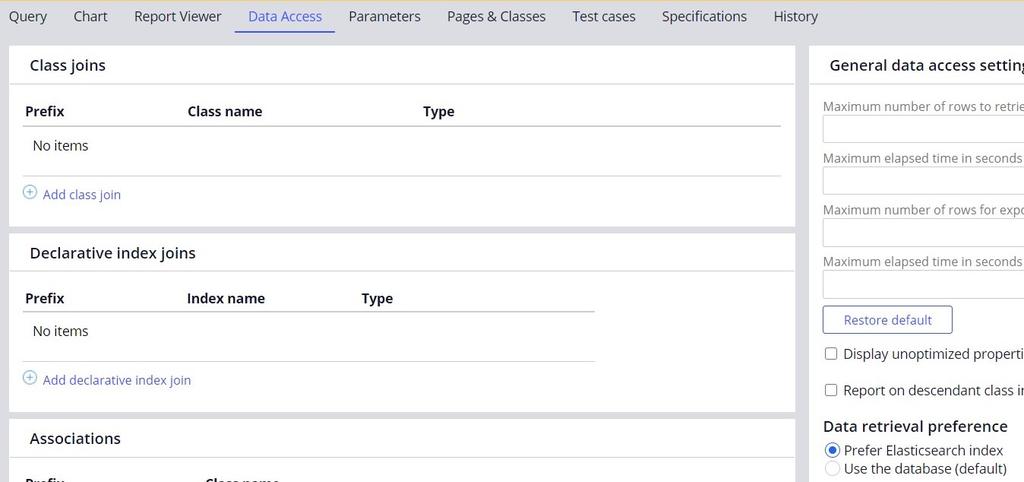

- Verify that data retrieval preference is set to use the database (default).

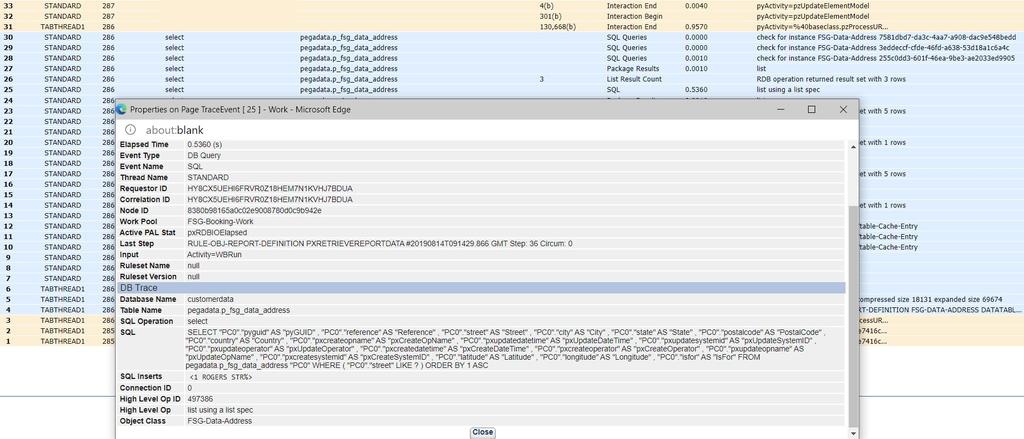

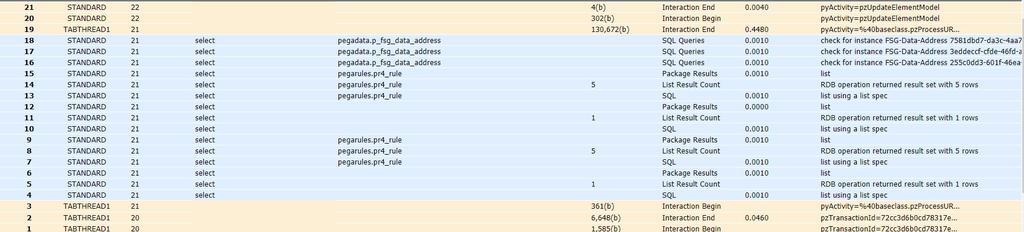

- Start the Tracer tool and run the report definition to verify the elapsed time in the Tracer.

- Create the index for the required columns (for example, Street).

- Following the index creation for the column, execute the query plan to view the analysis/statistics.

- Start the Tracer tool and run the earlier configured report definition to view the elapsed time in the Tracer.

- Compare the time spent and the elapsed time for the query before and after index creation (step 5 versus step 8).

- Next, try the report using elastic search index. You will need to modify the report and create an elastic search index.

- Update the report definition data retrieval preference to Prefer elastic search index.

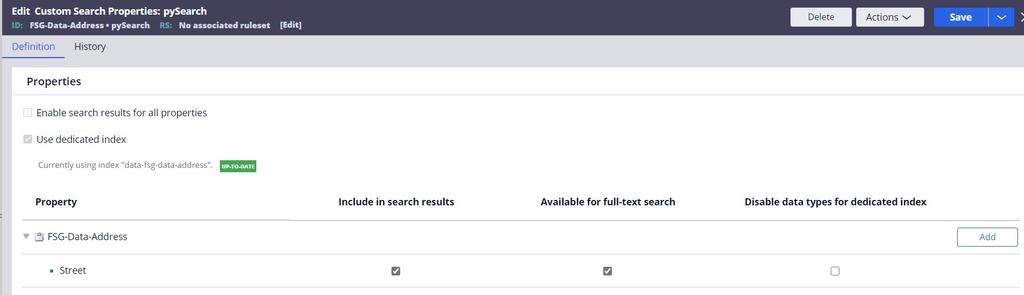

- Create a custom search property rule and add the required column/property.

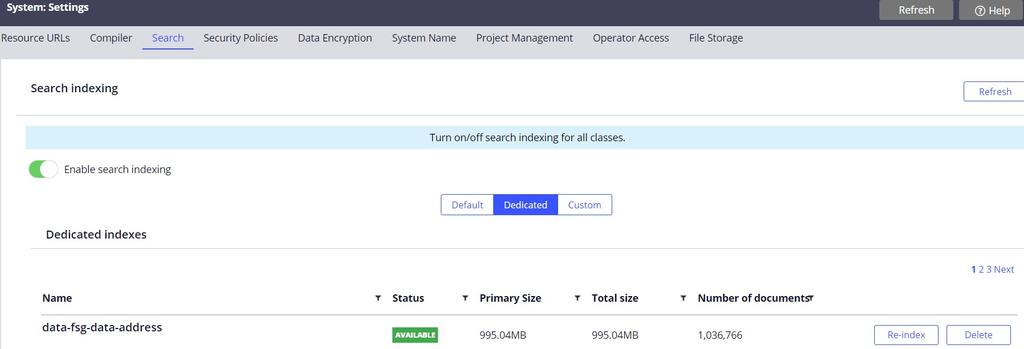

- Create the dedicated index.

- Verify that the required dedicated index is available. If not, index or re-index to make it available.

- Start the Tracer tool, and run the report definition using elastic search to view elapsed time in the Tracer.

- Compare the elapsed time of interaction end of database retrieval vs elastic search index (step 8 vs step 14).

The report definition that is sourced by an elastic search index retrieves results faster than sourced by a database.

Available in the following mission:

If you are having problems with your training, please review the Pega Academy Support FAQs.

Want to help us improve this content?