Scenario testing

Use UI-based functional tests and end-to-end scenario tests to verify that end-to-end cases work as expected. With the UI-based scenario testing tool, developers can create functional and useful tests for single-page applications (SPAs) without writing complex code. Access to the scenario testing feature is granted by the @baseclass pxScenarioTestAutomation privilege, which is included in the out-of-the-box PegaRULES:SysAdm4 role.

Tests are saved in a dedicated test ruleset that is defined in the application rule.

Note: For more details on test rulesets, see Creating a test ruleset to store test cases. To learn more about test suites, see Grouping scenario tests into suites.

Scenario tests for features

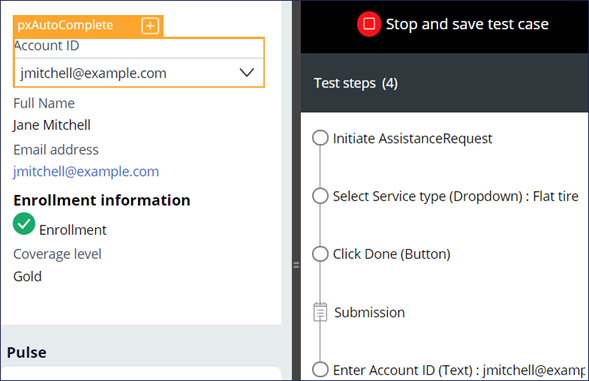

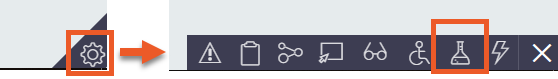

With scenario testing, you can create UI-based, end-to-end scenarios to test your application. Users who have access to the run-time toolbar record scenario tests in the context of the application portal. In the Dev Studio application portal, use the menu to navigate to the specific portal (such as the User Portal) in which you want to record the test. Use the Automation Recorder icon on the run-time toolbar to create or modify a scenario test.

When you start the test recorder, specify whether you are creating a Case type or a Portal scenario test.

Note: Running scenario tests from Deployment Manager requires a Selenium runner. For more information, see Deploying revisions with Deployment Manager. You can also run scenario tests from other pipeline tools by using the associated Pega API. For more information, see the Pega RESTful API for remote execution of scenario tests discussion post.

Explicit assertions and scenario tests

Interactions are recorded as a visual series of steps, and completing a test step might include a delay. When you use the Automation Recorder and hover over an element, an orange highlight box indicates a supported user interface element that you can test.

The Mark for assertion icon on the orange highlight box provides two features:

- Adding wait times after an action in the test scenario.

- Creating validation steps in the test scenario.

Use explicit assertions to define expected outputs that validate business or testing requirements for your application. For example, suppose the requirements state that if the customer type property is equal to Gold, a 10% discount is applied to the customer order. If the test fails, the results indicate where the 10% discount was not applied.
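To make the idea of an explicit assertion concrete, the following Python sketch expresses the Gold discount requirement as an expected output and compares it with the actual order total. It is not Pega code: scenario tests are recorded in the UI rather than written in Python, and the Order class, its field names, and the expected_total and assert_discount functions are hypothetical names used only for illustration.

```python
from dataclasses import dataclass

GOLD_DISCOUNT = 0.10  # the 10% discount from the example requirement


@dataclass
class Order:
    """Hypothetical order record; field names are illustrative, not Pega properties."""
    customer_type: str
    subtotal: float
    total: float


def expected_total(order: Order) -> float:
    """Derive the expected total from the business requirement."""
    if order.customer_type == "Gold":
        return round(order.subtotal * (1 - GOLD_DISCOUNT), 2)
    return order.subtotal


def assert_discount(order: Order) -> None:
    """Explicit assertion: fail if the actual total differs from the expected total."""
    expected = expected_total(order)
    if abs(order.total - expected) >= 0.005:  # tolerance for currency rounding
        raise AssertionError(
            f"{order.customer_type} order: expected total {expected}, got {order.total}"
        )


assert_discount(Order("Gold", 200.0, 180.0))    # passes: discount applied
assert_discount(Order("Silver", 200.0, 200.0))  # passes: no discount expected
```

A Gold order with a subtotal of 200.0 passes only when its total is 180.0; any other total raises an AssertionError whose message identifies the order, much as a failed assertion step points to where the discount was not applied.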

Each validation is performed while the test scenario runs. Adding wait times gives the application extra processing time for steps that are expected to respond slowly. Wait times are essential to ensure that the slow response of a single step does not cause the rest of the test script to fail.
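The effect of a wait time can be sketched as a polling loop: a step checks a condition repeatedly until it succeeds or a timeout expires, so a slow response gets extra time instead of failing on the first check. This is a generic Python illustration under that assumption, not how the Automation Recorder implements waits; wait_until and make_slow_step are hypothetical helpers.

```python
import time
from typing import Callable


def wait_until(condition: Callable[[], bool], timeout_s: float, poll_s: float = 0.1) -> bool:
    """Poll `condition` until it returns True or `timeout_s` seconds elapse.

    Returns True if the condition was met in time, False if the wait timed out.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(poll_s)


def make_slow_step(ready_after_s: float) -> Callable[[], bool]:
    """Simulate a step whose result becomes available after `ready_after_s` seconds."""
    start = time.monotonic()
    return lambda: time.monotonic() - start >= ready_after_s


print(wait_until(make_slow_step(0.3), timeout_s=1.0, poll_s=0.05))  # True
print(wait_until(make_slow_step(5.0), timeout_s=0.2, poll_s=0.05))  # False
```

With a 1-second timeout, a step that becomes ready after 0.3 seconds passes; with a 0.2-second timeout, a step that needs 5 seconds fails. Setting the wait per step, rather than one global delay, keeps fast steps fast while still tolerating the slow ones.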

Scenario testing landing page

After you save a test, it is available on the Application: Scenario testing landing page. You can access the landing page from the Dev Studio header by clicking Configure > Application > Quality > Automated Testing > Scenario Testing > Test Cases.

The scenario testing landing page provides a graphical test creation tool that you can use to increase test coverage without writing complex code. You can view and run scenario test cases. By viewing reports, you can also identify case types and portals that did not pass scenario testing.

Note: For more information about running scenario test suites from the landing page, see Running scenario test suites.

Best practices for scenario testing

Create scenario tests for a specific purpose, such as smoke testing or regression testing. Before running tests, log in and create the case types manually so that their pages are cached and render faster in subsequent runs.

To improve application quality, a project lead or lead system architect (LSA) periodically reviews the scenario testing results and performs the following actions:

- Reviews the failed test cases and takes corrective action.

- Adds more test cases for case type and portal rules to increase test coverage.

- Reviews existing test cases and instructs team members to update any that are affected by newly introduced functional changes.

Performing actions in tests

After starting the recording, perform actions slowly, because scenario testing captures live input. Wait until the page or page element, such as a dropdown list or section, refreshes completely before recording the next step. Similarly, wait for any activity associated with a click action to complete before performing another click action.

You can see when a step is updated in the recording panel. If something goes wrong while recording a scenario test, cancel the test and restart the recording.

If you need to record an element that is behind the recording panel, collapse the recording panel while recording. After recording, expand the recording panel again to view the recorded steps.

Note: Collapsing and expanding the recording panel is not recorded as a step.

Do not use autofill to enter data in forms, because the autofill selection might negatively affect the test. Additionally, changing the data-test-id of any element in an existing section causes the scenario test to fail. If you need to update a data-test-id, re-create or update the test case.
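One way to see why changing a data-test-id breaks a recorded test is a simplified model in which each recorded step stores the data-test-id of its target element, and replay looks the element up by that identifier. The Python sketch below assumes that model; the Step class, the replay function, and identifiers such as submit-btn are hypothetical, and a real page is a DOM tree rather than a dictionary.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Step:
    """A recorded step (hypothetical model): an action and the data-test-id it targets."""
    action: str        # for example "click" or "enter"
    data_test_id: str  # identifier captured when the step was recorded


def replay(steps: List[Step], section: Dict[str, str]) -> List[Step]:
    """Return the recorded steps whose target data-test-id is missing from the section.

    `section` maps each data-test-id on the rendered section to an element type.
    An empty result means every step found its element.
    """
    return [step for step in steps if step.data_test_id not in section]


recorded = [Step("enter", "customer-name"), Step("click", "submit-btn")]

original_section = {"customer-name": "input", "submit-btn": "button"}
renamed_section = {"customer-name": "input", "submit-order-btn": "button"}

print(replay(recorded, original_section))  # []
print(replay(recorded, renamed_section))   # [Step(action='click', data_test_id='submit-btn')]
```

Replaying the recorded steps against the original section finds every element. After submit-btn is renamed to submit-order-btn, the click step no longer finds its target, which is why the test case must be re-created or updated.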

Limitations of scenario testing

Scenario tests must run in the same portal in which you record them. For example, if you record a scenario test in a manager portal, you cannot run that test from the user mobile portal. Create separate scenario tests for each portal that you need to test. You also cannot use different personas or logins within a scenario test, because testing ends when you log out, and you cannot run the same test as a different user.

Other limitations include:

- File uploads and downloads are not supported because they require interaction with the operating system.

- Hover-related CSS styles, such as on-hover actions, are not available during scenario testing.

- A scenario test cannot include another scenario test.

- Scenario tests do not support the setup or cleanup of test data.
