Docker and Kubernetes

When software is developed and tested in one computing environment and then migrated to another, problems can arise if the supporting software environment is not identical. Examples include moving software developed on a laptop to a virtual machine (VM), or moving it from a Linux to a Windows operating system.

Software containers solve the migration problems that can occur when an application moves from one environment to another.

Containers package an application into a single bundle together with the related binaries, libraries, configuration files, and any other dependencies that it requires to run. You can use containers to run anything from a small microservice to a large application.

Common use cases for containers include:

- Lifting and shifting existing applications into modern cloud architectures.

- Running repetitive jobs and tasks, which new containers can handle quickly.

The following list shows examples of popular container engines and platforms:

- Docker

- AWS Fargate

- LXC (Linux Containers)

- rkt

- Apache Mesos

Docker

Docker Engine is an open-source containerization technology for building and containerizing applications.

It enables fast, consistent delivery, deployment, and scaling of applications, and it lets you run more workloads on the same hardware.

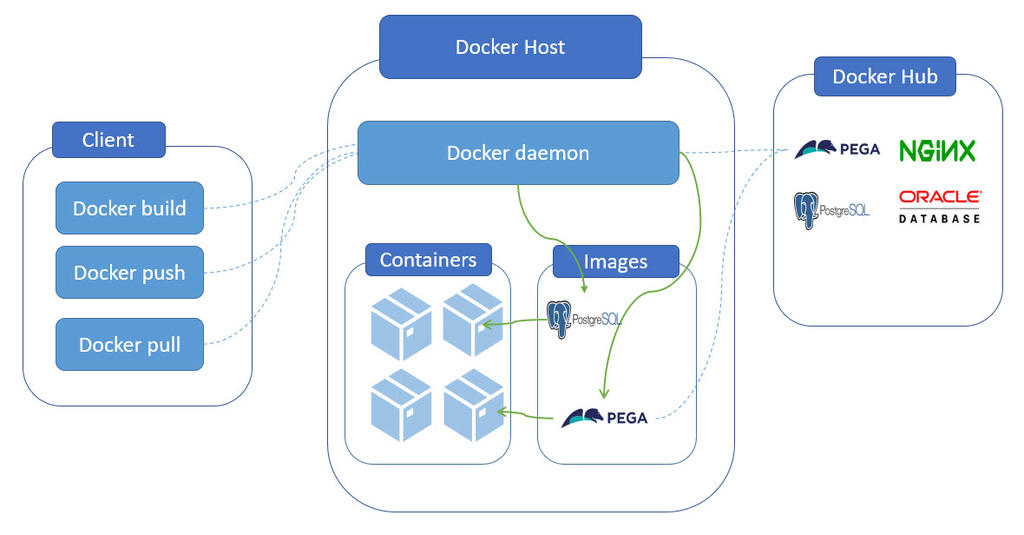

Docker Engine uses a client-server architecture. The Docker client communicates through a REST API with the Docker daemon, which runs on the Docker host. A Docker registry, such as Docker Hub, stores public and private Docker images.
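The following Python sketch illustrates the client-server split by acting as a minimal Docker client. It sends plain HTTP requests to the Docker daemon's REST API over a Unix domain socket. It assumes a Linux host where the daemon listens on the default socket `/var/run/docker.sock`. The class and function names are hypothetical, and only standard-library calls are used. The `/_ping` and `/containers/json` paths are Docker Engine API endpoints.

```python
import http.client
import socket
from typing import Tuple


class UnixHTTPConnection(http.client.HTTPConnection):
    """An HTTPConnection that talks to a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0):
        # "localhost" only fills the HTTP Host header; no TCP connection is made.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        # Open the Unix socket that the Docker daemon listens on.
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        try:
            sock.connect(self.socket_path)
        except OSError:
            sock.close()
            raise
        self.sock = sock


def docker_get(path: str, socket_path: str = "/var/run/docker.sock") -> Tuple[int, bytes]:
    """Send GET `path` to the Docker Engine API and return (status, body)."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        resp = conn.getresponse()
        return resp.status, resp.read()
    finally:
        conn.close()
```

The daemon must be running, and the caller needs permission to read the socket. Under those conditions, `docker_get("/_ping")` should return status 200 with the body `b"OK"`. `docker_get("/containers/json")` returns a JSON list of running containers, which is the same information that `docker ps` displays.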

A Pega Platform installation requires an application server and a database server, and each server runs in its own container. The first container holds the database server together with its required libraries and other components. The second container holds the Tomcat application server and the database driver library file (JDBC driver) that the application server uses to connect to the database.
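The two-container layout above can be expressed as a Docker Compose definition. The following Python sketch builds one as a dictionary and prints it as JSON. Because JSON is valid YAML, Compose should accept the output. The sketch is illustrative only:

- PostgreSQL stands in for the database.
- `example/pega-tomcat:latest` is a hypothetical image name for the Tomcat container.
- The `JDBC_*` and `DB_*` variable names and the driver path are assumptions, not settings documented for Pega's images.

```python
import json


def pega_compose(db_password: str) -> dict:
    """Return a hypothetical two-service Compose definition for Pega Platform."""
    return {
        "services": {
            # Container 1: the database server and its libraries.
            "pega-db": {
                "image": "postgres:15",
                "environment": {
                    "POSTGRES_DB": "pega",
                    "POSTGRES_USER": "pega",
                    "POSTGRES_PASSWORD": db_password,
                },
                "volumes": ["pega-db-data:/var/lib/postgresql/data"],
            },
            # Container 2: the Tomcat application server plus the JDBC driver.
            "pega-web": {
                "image": "example/pega-tomcat:latest",  # hypothetical image
                "depends_on": ["pega-db"],  # start the database container first
                "ports": ["8080:8080"],
                "environment": {
                    # Hypothetical variables; "pega-db" resolves on the Compose network.
                    "JDBC_URL": "jdbc:postgresql://pega-db:5432/pega",
                    "JDBC_DRIVER_JAR": "/usr/local/tomcat/lib/postgresql.jar",
                    "DB_USERNAME": "pega",
                    "DB_PASSWORD": db_password,
                },
            },
        },
        # Named volume so that database files survive container re-creation.
        "volumes": {"pega-db-data": {}},
    }


print(json.dumps(pega_compose("change-me"), indent=2))
```

Note that `depends_on` only orders container startup. It does not wait until the database accepts connections.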

In the Pega Platform™ installation example, using a container technology such as Docker provides the following benefits:

- Automatically scale web servers up or down as the workload changes.

- Automatically replace a web server that fails.

- Monitor container health.
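Automatic scaling and replacement both rely on a control loop that compares the desired state with the observed state. The following Python sketch is a simplified model, not Pega or Kubernetes code, and it contains two functions:

- `desired_replicas` applies the scaling rule documented for the Kubernetes Horizontal Pod Autoscaler: desired = ceil(current * currentMetric / targetMetric). It skips changes that fall within a 10% tolerance.
- `reconcile` replaces web servers whose health check failed.

The server names and the health map are hypothetical.

```python
import math
from itertools import count
from typing import Dict, Iterator


def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Replica count from the HPA rule, clamped to [min_replicas, max_replicas]."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current  # close enough to the target: do not scale
    wanted = math.ceil(current * ratio)
    return max(min_replicas, min(max_replicas, wanted))


def reconcile(health: Dict[str, bool], desired: int, ids: Iterator[int]) -> Dict[str, bool]:
    """Drop unhealthy servers, then add or remove servers until `desired` remain."""
    # Keep only servers whose last health check passed (insertion order is kept).
    alive = {name: True for name, ok in health.items() if ok}
    # Scale down: remove the most recently added servers first.
    while len(alive) > desired:
        alive.popitem()
    # Scale up, which also replaces any unhealthy server dropped above.
    while len(alive) < desired:
        alive[f"web-{next(ids)}"] = True
    return alive
```

For example, three servers averaging 90% CPU against a 60% target give ceil(3 * 1.5) = 5 replicas. If one of those servers then fails its health check, `reconcile` drops it and starts a freshly named server in its place.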

Container orchestration

Container orchestration becomes necessary when the number of containers grows considerably, notably in continuous integration and continuous delivery (CI/CD) pipelines. Container orchestration automates the management, scheduling, load balancing, networking, and monitoring of containers.
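Scheduling is the orchestration task that decides where each container runs. The following Python sketch follows the two phases that kube-scheduler uses. It first filters out nodes that cannot fit the request, then scores the remaining nodes. Its scoring rule is deliberately simple: prefer the node with the most CPU left after placement. The `Node` type, the resource units, and the node names are assumptions for illustration; the real scheduler weighs many more factors.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu_m: int   # free CPU in millicores
    free_mem_mi: int  # free memory in MiB


def schedule(cpu_m: int, mem_mi: int, nodes: List[Node]) -> Optional[str]:
    """Place one container on a node and reserve its resources there."""
    # Filter phase: keep only nodes with enough free CPU and memory.
    feasible = [n for n in nodes if n.free_cpu_m >= cpu_m and n.free_mem_mi >= mem_mi]
    if not feasible:
        return None  # nothing fits; the container waits
    # Score phase: prefer the node with the most CPU (then memory) left over.
    best = max(feasible, key=lambda n: (n.free_cpu_m - cpu_m, n.free_mem_mi - mem_mi))
    # Reserve the requested resources on the chosen node.
    best.free_cpu_m -= cpu_m
    best.free_mem_mi -= mem_mi
    return best.name
```

When no node fits, the sketch returns `None`. This roughly corresponds to a Pod staying in the Pending state until resources free up.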

Popular container orchestration tools include:

- Kubernetes

- Docker Swarm

- Apache Mesos

Kubernetes

Kubernetes is an open-source container orchestration engine that automates the deployment, scaling, and management of containerized applications. The open-source project is hosted by the Cloud Native Computing Foundation (CNCF).
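In Kubernetes, automated deployment and scaling are usually described declaratively with a Deployment object, which keeps a set number of identical Pods running. The following Python sketch builds a minimal Deployment manifest for a Pega web tier as a dictionary and prints it as JSON. `kubectl apply -f` accepts JSON as well as YAML. The following values are hypothetical placeholders, not values from Pega's published configuration:

- the name `pega-web`
- the `app` label
- the image
- the probe path and timings

```python
import json


def pega_web_deployment(image: str, replicas: int = 2) -> dict:
    """Return a minimal, hypothetical Deployment manifest for the Pega web tier."""
    labels = {"app": "pega-web"}  # hypothetical label shared by selector and Pods
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "pega-web", "labels": dict(labels)},
        "spec": {
            "replicas": replicas,                        # desired number of Pods
            "selector": {"matchLabels": dict(labels)},   # which Pods it manages
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [{
                        "name": "pega-web",
                        "image": image,
                        "ports": [{"containerPort": 8080}],
                        # The kubelet restarts the container if this probe keeps failing.
                        "livenessProbe": {
                            "httpGet": {"path": "/prweb/", "port": 8080},  # hypothetical path
                            "initialDelaySeconds": 120,
                            "periodSeconds": 30,
                        },
                    }],
                },
            },
        },
    }


print(json.dumps(pega_web_deployment("example/pega-web:latest"), indent=2))
```

Changing `replicas` and re-applying the manifest scales the web tier. The liveness probe lets Kubernetes restart a container that stops responding.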

The following terms describe how Kubernetes is used to orchestrate Pega Platform.

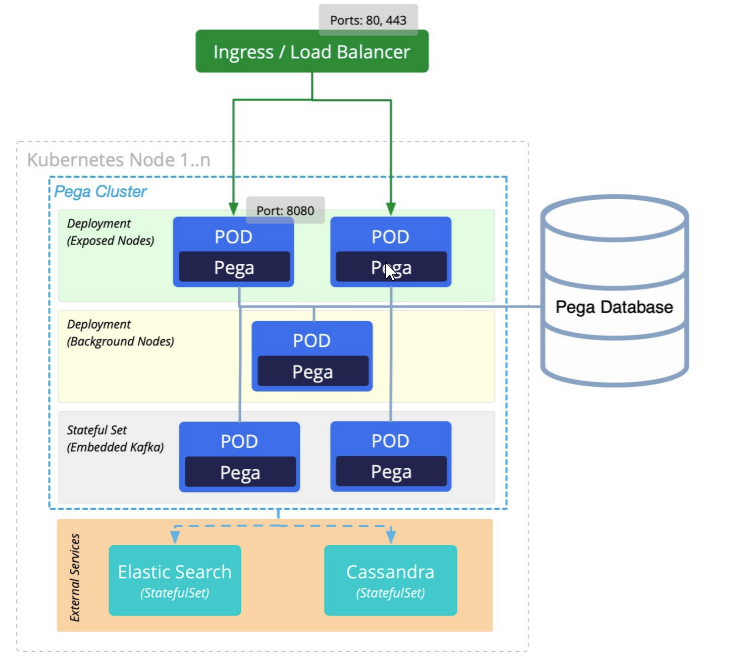

Orchestration of Pega Platform starts with Pods. A Pod is the smallest deployable unit in Kubernetes. It runs one or more containers, which are created from the Docker images that Pega provides. Kubernetes lets you define a logical set of Pods and a policy for accessing them; this abstraction is called a Service. External access can be managed with an Ingress. Kubernetes also supports configuration changes through ConfigMaps, which can hold XML-based configuration files such as prconfig.xml.
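The following Python sketch shows how these terms map to Kubernetes objects:

- a Service that selects Pods labeled `app: pega-web`
- an Ingress that routes external HTTP traffic to that Service
- a ConfigMap that carries a prconfig.xml file

The object names, the label, the host, and the `env` entry inside prconfig.xml are hypothetical. An Ingress also needs an ingress controller installed in the cluster to take effect.

```python
import json

LABELS = {"app": "pega-web"}  # hypothetical label; must match the Pods' labels

# Hypothetical prconfig.xml content with a placeholder setting.
SAMPLE_PRCONFIG = """<?xml version="1.0" encoding="UTF-8"?>
<pegarules>
  <env name="example/setting" value="true"/>
</pegarules>
"""


def pega_service() -> dict:
    # Service: a logical set of Pods (chosen by label) and a policy to reach them.
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": "pega-web"},
        "spec": {"selector": dict(LABELS), "ports": [{"port": 80, "targetPort": 8080}]},
    }


def pega_ingress(host: str) -> dict:
    # Ingress: routes external HTTP traffic for `host` to the Service.
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": "pega-web"},
        "spec": {"rules": [{
            "host": host,
            "http": {"paths": [{
                "path": "/",
                "pathType": "Prefix",
                "backend": {"service": {"name": "pega-web", "port": {"number": 80}}},
            }]},
        }]},
    }


def pega_configmap(prconfig_xml: str) -> dict:
    # ConfigMap: stores prconfig.xml under a key that can be mounted as a file.
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": "pega-config"},
        "data": {"prconfig.xml": prconfig_xml},
    }


# Bundle the objects in a v1 List so that one file can be applied with kubectl.
bundle = {
    "apiVersion": "v1",
    "kind": "List",
    "items": [pega_service(), pega_ingress("pega.example.com"), pega_configmap(SAMPLE_PRCONFIG)],
}
print(json.dumps(bundle, indent=2))
```

To make the file visible to Pega, a Deployment would typically mount the ConfigMap as a volume so that `prconfig.xml` appears as a file inside the container.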
